The Organisation for Economic Co-operation and Development (OECD) launched the AI Capability Indicators, a first-of-its-kind framework to systematically measure artificial intelligence (AI) progress against nine domains of human capability.

Developed over five years with contributions from over 50 experts in AI, psychology, and education, this beta framework offers policymakers, educators, and employers an evidence-based tool to assess AI’s societal impacts and prepare for its transformative potential.

We went through the report.


Purpose and Utility of the AI Capability Indicators

The OECD AI Capability Indicators are designed with three core objectives:

- Comprehensiveness: They map AI capabilities to human abilities across cognitive, perceptual, and physical domains, providing a holistic view of AI’s development.

- Trackability: A five-level scale tracks AI’s progression from basic to human-equivalent performance, enabling longitudinal monitoring of advancements.

- Policy Relevance: By linking AI capabilities to occupational and educational demands, the indicators guide strategic decisions on workforce training, curriculum design, and ethical governance.

Unlike traditional AI benchmarks (e.g., MMLU), which focus on narrow performance metrics, this framework compares AI to human abilities in real-world contexts, making it accessible to non-technical audiences and relevant for policy applications.

Key Findings and Themes

1. A Framework Grounded in Human Psychology

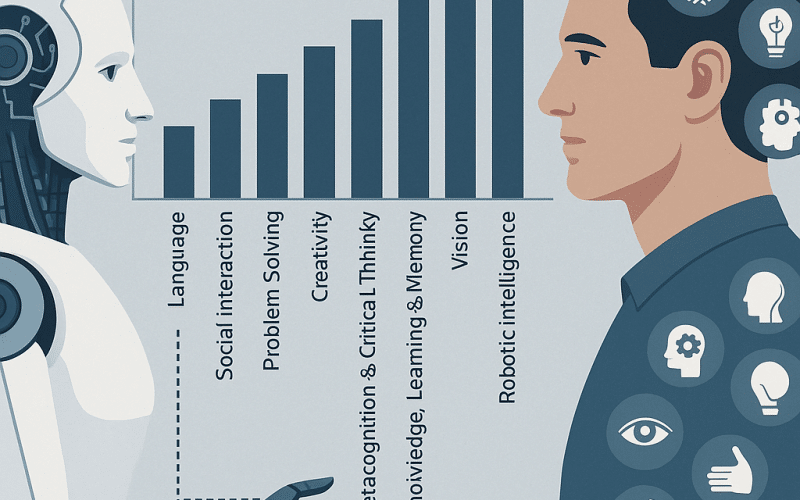

The indicators cover nine domains of human ability, each assessed on a five-level scale:

- Language

- Social Interaction

- Problem Solving

- Creativity

- Metacognition and Critical Thinking

- Knowledge, Learning, and Memory

- Vision

- Manipulation

- Robotic Intelligence

As of November 2024, cutting-edge AI systems, including large language models (LLMs) such as GPT-4o, generally operate at levels 2 to 3 across these domains.

For instance, LLMs perform strongly on language tasks (level 3), generating semantically meaningful text across multiple languages, but still struggle with robust analytical reasoning and remain prone to hallucination. Similarly, robotic systems are limited to level 2 in manipulation and robotic intelligence, performing well in controlled environments but lacking adaptability in dynamic or unstructured settings. Level 5, representing full human equivalence, remains out of reach in every domain, reflecting AI's current limitations in generalization, ethical reasoning, and real-time adaptability.

Overview of Current AI Capability Levels (November 2024)

| Domain | Level (1–5) | Capability Description |
|---|---|---|
| Language | 3 | AI systems reliably understand and generate semantic meaning using multi-corpus knowledge. They show advanced logical and social reasoning ability, process text, speech, and images, support diverse languages, and adapt through iterative learning techniques. |
| Social Interaction | 2 | AI systems combine simple movements to express emotions, learn from interactions, recall events, adapt slightly based on experience, recognize basic signals, detect emotions through tone and context, and apply past experiences to recurring challenges. |
| Problem Solving | 2 | AI systems integrate qualitative reasoning (e.g., spatial or temporal relationships) with quantitative analysis to address complex professional problems framed using conventional domain abstractions, predicting system evolution over time. |
| Creativity | 3 | AI systems generate valuable outputs that deviate significantly from training data, challenge traditional boundaries, generalize skills to new tasks, and integrate ideas across domains. |
| Metacognition and Critical Thinking | 2 | AI systems monitor their own understanding, adjust approaches, handle partially incomplete information, and discern what they know and do not know, requiring measured confidence and informed guesses. |
| Knowledge, Learning, and Memory | 3 | AI systems learn semantics through distributed representations, generalize to novel situations, and process massive datasets for context-sensitive understanding, but lack real-time learning capabilities. |
| Vision | 3 | AI systems handle variations in target object appearance and lighting, perform multiple subtasks, and cope with known variations in data and situations. |
| Manipulation | 2 | AI systems handle varied object shapes and moderately pliable materials in controlled environments with low to moderate clutter, navigate small obstacles, and perform tasks without time constraints. |
| Robotic Intelligence | 2 | Robotic systems operate in partially known, mostly static, semi-structured environments, handling simple multi-function tasks with inherent uncertainty and minimal human interaction, with little to no ethical issues. |

Source: OECD (2025), p. 14.
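For readers who want to reuse the table programmatically, here is a minimal Python sketch that encodes the November 2024 ratings as a dictionary and groups domains by level. The names `CURRENT_LEVELS` and `domains_at_level` are hypothetical illustrations, not part of any OECD tool or dataset.

```python
# Current AI capability levels from the table above (OECD, 2025, p. 14), November 2024.
# Levels use the five-level scale: 1 = basic, 5 = human-equivalent performance.
CURRENT_LEVELS: dict[str, int] = {
    "Language": 3,
    "Social Interaction": 2,
    "Problem Solving": 2,
    "Creativity": 3,
    "Metacognition and Critical Thinking": 2,
    "Knowledge, Learning, and Memory": 3,
    "Vision": 3,
    "Manipulation": 2,
    "Robotic Intelligence": 2,
}


def domains_at_level(levels: dict[str, int], level: int) -> list[str]:
    """Return the domains rated exactly at `level`, preserving table order."""
    return [domain for domain, rated in levels.items() if rated == level]


if __name__ == "__main__":
    # Print the profile from highest to lowest level to show the uneven spread.
    for level in range(5, 0, -1):
        print(f"Level {level}: {domains_at_level(CURRENT_LEVELS, level)}")
```

Running it lists four domains at level 3, five at level 2, and none at levels 1, 4, or 5: the uneven profile discussed below under the jagged frontier.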

2. Implications for Skills and Vocational Education and Training (VET)

The framework offers actionable insights for education and workforce planning:

- Gap Analysis: By mapping AI capabilities to occupational requirements (e.g., using the O*NET database), stakeholders can identify tasks AI can perform and those requiring uniquely human skills, such as nuanced social interaction or ethical decision-making. For example, teaching tasks like “adapting instructional materials to students’ needs” demand level 4–5 capabilities in language, problem solving, and social interaction, which AI cannot yet fully replicate.

- Curriculum Design: The indicators highlight the need to prioritize skills AI struggles with, such as creativity, metacognition, and ethical judgment. For instance, education systems may shift focus toward fostering emotional intelligence and critical thinking to prepare students for roles where human judgment remains essential.

- Future-Proofing: VET programs should emphasize dynamic motor control, interpersonal skills, and open-ended problem-solving to ensure resilience against automation. The indicators provide a roadmap for identifying “resilience zones” where human skills retain a competitive edge.
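The gap analysis described above can be sketched as a per-domain comparison between the levels a task requires and the levels AI currently reaches. This is a minimal illustration under stated assumptions: the function name `capability_gaps` is hypothetical, only the three domains from the teaching example are included, and the "level 4–5" requirement is encoded at its lower bound. A real analysis would need task-level ratings derived from a source such as O*NET, which this sketch does not attempt.

```python
# Hypothetical gap analysis: compare the capability levels a task requires with
# current AI levels (November 2024 values from the table above).
CURRENT_LEVELS: dict[str, int] = {
    "Language": 3,
    "Problem Solving": 2,
    "Social Interaction": 2,
}


def capability_gaps(required: dict[str, int], current: dict[str, int]) -> dict[str, int]:
    """Return how many scale levels AI falls short of each task requirement.

    Domains where current AI already meets the requirement are left out.
    Raises KeyError if `current` has no rating for a required domain.
    """
    gaps: dict[str, int] = {}
    for domain, needed in required.items():
        shortfall = needed - current[domain]
        if shortfall > 0:
            gaps[domain] = shortfall
    return gaps


# Assumption: the task's "level 4-5" requirement is encoded at its lower bound, level 4.
adapting_materials = {"Language": 4, "Problem Solving": 4, "Social Interaction": 4}

print(capability_gaps(adapting_materials, CURRENT_LEVELS))
# {'Language': 1, 'Problem Solving': 2, 'Social Interaction': 2}
```

Even at the lower bound, every domain shows a shortfall, consistent with the point that AI cannot yet fully replicate this teaching task.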

3. The Jagged Frontier of Automation

The OECD describes AI’s progress as a “jagged frontier,” reflecting uneven development across domains. For example:

- Advanced Domains: AI is furthest along in pattern recognition (e.g., vision systems for autonomous vehicles at level 3) and predictive text generation (e.g., LLMs at level 3 for language tasks).

- Lagging Domains: Capabilities like manipulation (level 2, limited to controlled environments) and ethical decision-making in robotic intelligence (level 2) remain underdeveloped, requiring significant human oversight.

This uneven progress suggests task-level, rather than job-level, transformation. For instance, AI can automate 60% of a legal assistant’s research tasks but cannot replicate the empathetic engagement of a social worker. By mapping AI capabilities to specific tasks, the indicators help policymakers identify where human-AI collaboration can thrive and where automation is feasible, guiding targeted reskilling efforts.
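To illustrate the task-level (rather than job-level) view, the sketch below treats an occupation as a set of tasks, each with per-domain requirements, and marks a task as within AI reach only if current levels meet every requirement. The task list and its ratings are invented for illustration and do not come from the OECD report; the all-domains rule and the function names `within_reach` and `split_tasks` are likewise assumptions.

```python
# Hypothetical task-level view of a single occupation. Task names and their
# required levels are invented for illustration; they are not OECD ratings.
CURRENT_LEVELS: dict[str, int] = {  # November 2024 values from the table above
    "Language": 3,
    "Social Interaction": 2,
    "Metacognition and Critical Thinking": 2,
    "Knowledge, Learning, and Memory": 3,
}


def within_reach(required: dict[str, int], current: dict[str, int]) -> bool:
    """A task is within reach only if AI meets the required level in every domain."""
    return all(current[domain] >= level for domain, level in required.items())


def split_tasks(
    tasks: dict[str, dict[str, int]], current: dict[str, int]
) -> tuple[list[str], list[str]]:
    """Split an occupation's tasks into (within AI reach, still needing humans)."""
    automatable = [name for name, req in tasks.items() if within_reach(req, current)]
    human = [name for name, req in tasks.items() if not within_reach(req, current)]
    return automatable, human


legal_assistant_tasks = {
    "summarize case documents": {"Language": 3},
    "retrieve relevant precedents": {"Language": 3, "Knowledge, Learning, and Memory": 3},
    "judge which arguments will persuade": {"Metacognition and Critical Thinking": 4},
    "reassure a distressed client": {"Social Interaction": 4},
}

automatable, human = split_tasks(legal_assistant_tasks, CURRENT_LEVELS)
print(f"Within reach: {automatable}")
print(f"Needs humans: {human}")
print(f"Share within reach: {len(automatable) / len(legal_assistant_tasks):.0%}")
```

Because each task is judged separately, the same occupation ends up partly within reach and partly not, which is the pattern that motivates targeted reskilling rather than wholesale job replacement.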

4. Transparent and Evolving Methodology

Released in beta form, the indicators invite feedback from AI researchers, psychologists, and policymakers to refine their accuracy and relevance.

The OECD commits to:

- Iterative Updates: Regular updates will incorporate new benchmarks and expert input, with the first revision planned for 2026.

- Global Relevance: The framework supports multilingual and context-aware applications, ensuring applicability across diverse economic and cultural contexts.

- Stakeholder Engagement: An online repository allows researchers to submit benchmarks, fostering collaborative refinement.

This open approach ensures the indicators remain a dynamic tool, responsive to rapid AI advancements and societal needs.

Broader Significance and Takeaway

The OECD AI Capability Indicators are a values-based framework that prompts critical reflection on AI’s role in society:

- What should humans uniquely do? The indicators highlight capabilities like ethical reasoning and social interaction, where humans retain a distinct advantage, guiding decisions on where to preserve human agency.

- Which skills define a meaningful life in the AI age? By identifying areas where AI falls short, the framework underscores the importance of creativity, empathy, and critical thinking as enduring human strengths.

- How should we educate for an AI-driven world? The indicators provide a lens for reimagining education, emphasizing skills that complement AI and prepare students for a future where machines share cognitive tasks.

A Landmark Contribution to Global AI Governance

The OECD’s AI Capability Indicators provide a standardized, evidence-based framework for assessing artificial intelligence progress in relation to human abilities. By defining nine core capability domains and applying a five-level rating scale, the indicators allow policymakers, educators, and employers to track AI development systematically.

The tool supports anticipatory governance by identifying which human skills are currently most susceptible to automation, and which remain resistant. It also informs curriculum reform and vocational training by highlighting areas where human labor retains a comparative advantage.

As such, the framework contributes directly to data-driven policy development in education, workforce planning, and ethical AI deployment.

FAQ: OECD AI Capability Indicators

1. What are the OECD AI Capability Indicators?

The OECD AI Capability Indicators are a pioneering framework developed by the Organisation for Economic Co-operation and Development (OECD) to systematically measure artificial intelligence (AI) capabilities against nine domains of human ability. Launched in 2025, the indicators provide policymakers, educators, and employers with an evidence-based tool to assess AI’s progress and its societal impacts, particularly in education, work, and public policy.

2. What are the nine core capability domains covered by the AI Capability Indicators?

The indicators assess AI across the following nine human ability domains:

- Language

- Social Interaction

- Problem Solving

- Creativity

- Metacognition and Critical Thinking

- Knowledge, Learning, and Memory

- Vision

- Manipulation

- Robotic Intelligence

Each domain is evaluated on a five-level scale, from basic tasks (Level 1) to human-equivalent performance (Level 5).

3. Why were the AI Capability Indicators developed?

The indicators were created to address the gap in understanding AI’s capabilities and their implications for society. They aim to:

- Provide a comprehensive assessment by linking AI capabilities to human abilities.

- Enable trackability of AI progress over time using a five-level scale.

- Offer policy-relevant insights to guide decisions on education, workforce training, and ethical governance.

Unlike traditional AI benchmarks (e.g., MMLU), the indicators focus on real-world applications and are understandable to non-technical audiences.

4. How were the AI Capability Indicators developed?

The indicators were developed over five years by the OECD’s Artificial Intelligence and Future of Skills (AIFS) project, involving over 50 experts in AI, psychology, and education. The methodology included:

- Constructing five-level scales for each domain, grounded in human psychology.

- Using available benchmarks, competitions, and expert judgments to assess AI performance.

- Conducting a structured peer review in late 2024 with 25 researchers to refine the framework.

- Releasing the indicators in beta form to invite further feedback.

5. What is the current state of AI capabilities according to the indicators?

As of November 2024, cutting-edge AI systems operate at levels 2 to 3 across the nine domains:

- Level 3: Language (e.g., LLMs like GPT-4o generate multilingual text), Creativity (e.g., novel outputs like AlphaZero’s strategies), Knowledge, Learning, and Memory (e.g., processing large datasets), and Vision (e.g., autonomous vehicle navigation).

- Level 2: Social Interaction (e.g., basic emotion detection), Problem Solving (e.g., logistics planning), Metacognition and Critical Thinking (e.g., basic self-monitoring), Manipulation (e.g., pick-and-place tasks), and Robotic Intelligence (e.g., robotic delivery systems).

No AI system reaches level 4 or 5, indicating limitations in achieving human-like generalization, adaptability, and ethical reasoning.

6. What does the “jagged frontier” of AI capability mean?

The “jagged frontier” refers to the uneven progress of AI across different domains. For example, AI excels in language and vision (level 3) but lags in social interaction and manipulation (level 2). This suggests that automation will impact specific tasks within jobs rather than entire occupations, necessitating targeted policy responses for reskilling and human-AI collaboration.

7. How can the AI Capability Indicators be used in education?

The indicators help education policymakers:

- Identify Transformational Shifts: They highlight where AI can perform teaching tasks (e.g., delivering instruction) and where human skills like empathy are essential, potentially reshaping teacher roles.

- Revise Curricula: They suggest prioritizing skills that AI has not mastered, such as the level 5 capabilities in creativity (Table 3.4) and critical thinking (Table 3.5), along with ethical judgment, to prepare students for an AI-driven future.

- Conduct Gap Analysis: By mapping AI capabilities to educational tasks, policymakers can identify areas for human-AI collaboration.

8. How do the AI Capability Indicators inform workforce planning?

The indicators enable:

- Occupational Mapping: By linking AI capabilities to job requirements (e.g., using O*NET), policymakers can identify tasks AI can automate and those requiring human skills. For example, legal research tasks may be automatable with level 3 language capabilities (Table 3.1), whereas social work tasks require level 5 social interaction (Table 3.2), well above the current level 2.

- Reskilling Strategies: They highlight “resilience zones” where human skills like ethical reasoning and dynamic motor control remain essential, guiding vocational education and training (VET) programs.

- Human-AI Collaboration: They identify tasks where AI and humans can complement each other, such as in teaching or healthcare.

9. What are the limitations of the AI Capability Indicators?

The beta indicators have several limitations:

- Uneven Benchmarks: Domains like language and vision have robust benchmarks, while social interaction and creativity rely heavily on expert judgment due to limited formal assessments.

- Generalization Challenges: The indicators describe the state of the art across AI systems as a whole, so they may not capture the limitations of any specific system.

- Need for Refinement: As a beta framework, the indicators require further standardization, additional evidence, and stakeholder feedback to enhance precision.

10. How will the AI Capability Indicators be updated?

The OECD plans to:

- Implement regular updates starting in 2026, incorporating new benchmarks and expert input.

- Monitor scientific literature for emerging tests and AI advancements.

- Launch an expert survey in 2026, modeled on the University of Chicago Economic Experts Panel, to assess unbenchmarked capabilities.

- Develop new benchmark tests and competitions to address gaps, with initial workshops planned for 2026 (OECD, 2025, pp. 24–25).

An online repository allows researchers to submit benchmarks for review.

11. How do the AI Capability Indicators address ethical concerns?

The indicators help identify AI capabilities that raise ethical issues, such as autonomy in high-stakes domains (e.g., healthcare, warfare). For example, Table 3.9 (Robotic Intelligence Scale) notes that level 5 robots can make ethical decisions, a capability current systems (level 2) lack, necessitating human oversight. The framework supports discussions on safety, fairness, and accountability in AI deployment.

12. Can the AI Capability Indicators measure progress toward Artificial General Intelligence (AGI)?

Yes, the indicators provide a framework for tracking progress toward AGI, defined as AI matching the full range of human cognitive and social abilities. Level 5 across all domains represents human-level general intelligence. By comparing AI’s progress across domains, the indicators offer a systematic, evidence-based approach to assess AGI claims, avoiding abstract definitions.
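The criterion in this answer, level 5 across all nine domains, reduces to a simple all-domains check. The minimal sketch below assumes ratings are stored as a domain-to-level dictionary; the names `levels_remaining` and `is_human_level_general` are hypothetical. It reports per-domain steps instead of a single score, since adding steps across domains would assume the levels are evenly spaced, which this sketch does not assume.

```python
# Sketch of the "level 5 in every domain" reading of human-level general intelligence.
HUMAN_EQUIVALENT = 5

DOMAINS = (
    "Language",
    "Social Interaction",
    "Problem Solving",
    "Creativity",
    "Metacognition and Critical Thinking",
    "Knowledge, Learning, and Memory",
    "Vision",
    "Manipulation",
    "Robotic Intelligence",
)


def levels_remaining(levels: dict[str, int]) -> dict[str, int]:
    """Scale steps left to level 5 in each domain; requires a rating for all nine."""
    missing = [d for d in DOMAINS if d not in levels]
    if missing:
        raise ValueError(f"missing ratings for: {missing}")
    return {d: max(HUMAN_EQUIVALENT - levels[d], 0) for d in DOMAINS}


def is_human_level_general(levels: dict[str, int]) -> bool:
    """True only if every one of the nine domains is rated at level 5."""
    return all(step == 0 for step in levels_remaining(levels).values())


# November 2024 ratings from the table earlier in the article, in DOMAINS order.
nov_2024 = dict(zip(DOMAINS, (3, 2, 2, 3, 2, 3, 3, 2, 2)))

print(is_human_level_general(nov_2024))  # False
print(levels_remaining(nov_2024))
```

Requiring a rating for every domain keeps the check from declaring success on a partial profile.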

13. Who can use the AI Capability Indicators, and how?

The indicators are designed for:

- Policymakers: To inform AI governance, education reform, and labor policies.

- Educators: To redesign curricula and teaching practices.

- Employers: To plan workforce training and human-AI collaboration.

- Researchers: To develop new benchmarks and evaluate AI progress.

The OECD plans to enhance usability through interactive formats, searchable databases, and real-world use case collections.

14. How can stakeholders provide feedback on the AI Capability Indicators?

Stakeholders, including AI researchers, psychologists, and policymakers, are invited to provide feedback via the OECD’s online repository. Submitted benchmarks and evaluations will be reviewed by the OECD and its expert group to refine the indicators for the first full release after 2025.

15. Where can I find more information?

For detailed insights, refer to:

- OECD (2025), Introducing the OECD AI Capability Indicators, OECD Publishing, Paris.

- The technical companion report: OECD (2025), AI and the Future of Skills Volume 3: The OECD AI Capability Indicators.

- The OECD AI-WIPS programme.